System Design - Design Uber Ridesharing System

Functional Requirements

- The system should support two types of clients: Drivers and Riders.

- A Rider should be able to see all nearby drivers.

- A Rider should be able to request a ride from their current location by specifying the destination.

- A Driver should be able to accept an incoming ride request from a Rider.

Non-Functional Requirements

- Availability: The system should be highly available so that riders can book rides at any time.

- Latency: The system should provide low latency for riders booking rides and for drivers accepting ride requests.

- Scalability: The system should be capable of supporting an increasing number of drivers and riders, as well as managing demand during peak and off-peak hours.

- Consistency: Once a driver accepts a ride, the ride request should immediately become unavailable to other drivers, so that a ride is never assigned to two drivers. The system must also ensure that ride statuses and location updates are consistent across both rider and driver applications.
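The consistency requirement for ride acceptance boils down to a check-and-set on the ride's status: the first driver to accept wins, and every later attempt fails. A minimal single-process sketch, assuming a hypothetical `RideRequest` record guarded by a lock (in a distributed deployment the same check-and-update would have to be atomic in the shared datastore, for example as a conditional update):

```python
import threading
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RideRequest:
    """Hypothetical in-memory ride record; a real system would keep this in a shared datastore."""
    ride_id: str
    rider_id: str
    status: str = "REQUESTED"          # REQUESTED -> ACCEPTED
    driver_id: Optional[str] = None
    _lock: threading.Lock = field(default_factory=threading.Lock, repr=False, compare=False)

    def try_accept(self, driver_id: str) -> bool:
        """Check-and-set: succeed only if the ride is still unassigned."""
        with self._lock:
            if self.status != "REQUESTED":
                return False  # another driver already won; this driver should no longer see the request
            self.status = "ACCEPTED"
            self.driver_id = driver_id
            return True
```

If several drivers tap "accept" at the same moment, exactly one `try_accept` call returns `True`; the others get `False` and their apps can remove the request from view.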

Capacity Requirements

- Assume there are around 100 million riders and 10 million drivers.

- Assume there are around 10 million daily active riders and 1 million daily active drivers.

- Assume there are 10 million ride requests per day.

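Assuming that the user metadata is 500 bytes per user, and rounding the roughly 110 million users (100 million riders plus 10 million drivers) to 100 million: 500 bytes/user * 100,000,000 users = 50,000,000,000 bytes, which equals 50 GB of storage.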

With 10 million rides per day, and each ride requiring 100 bytes to store ride information: 100 bytes/ride * 10,000,000 rides/day = 1,000,000,000 bytes/day, which is equivalent to 1 GB of storage per day.

Over the span of 5 years, accounting for 365 days per year, the storage calculation would be: 1 GB/day * 365 days/year * 5 years = 1,825 GB, which is approximately 2 TB of storage needed for 5 years.
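The storage arithmetic above can be reproduced in a few lines, which makes it easy to rerun with different assumptions. The constants are the figures assumed in this section, and decimal units are used (1 GB = 10^9 bytes):

```python
# Back-of-the-envelope storage estimates using the assumptions stated above.
USERS = 100_000_000        # user count used in the estimate above
BYTES_PER_USER = 500       # assumed user metadata size
RIDES_PER_DAY = 10_000_000
BYTES_PER_RIDE = 100       # assumed size of one ride record
RETENTION_YEARS = 5

GB = 10**9                 # decimal units, as in the text
TB = 10**12

user_metadata_bytes = USERS * BYTES_PER_USER
ride_bytes_per_day = RIDES_PER_DAY * BYTES_PER_RIDE
ride_bytes_total = ride_bytes_per_day * 365 * RETENTION_YEARS

print(f"User metadata: {user_metadata_bytes / GB:.0f} GB")    # User metadata: 50 GB
print(f"Rides per day: {ride_bytes_per_day / GB:.0f} GB")     # Rides per day: 1 GB
print(f"Rides over {RETENTION_YEARS} years: {ride_bytes_total / GB:,.0f} GB "
      f"(~{ride_bytes_total / TB:.1f} TB)")                   # Rides over 5 years: 1,825 GB (~1.8 TB)
```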

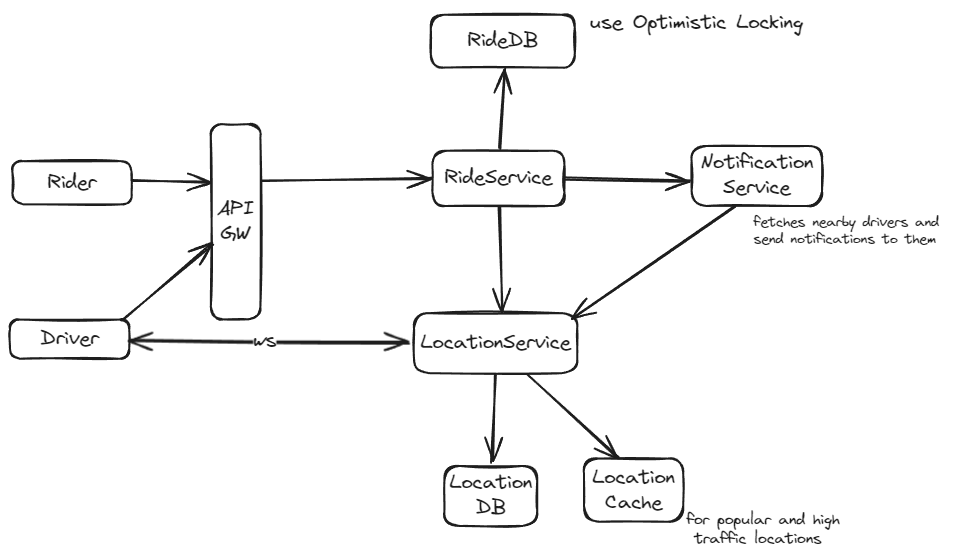

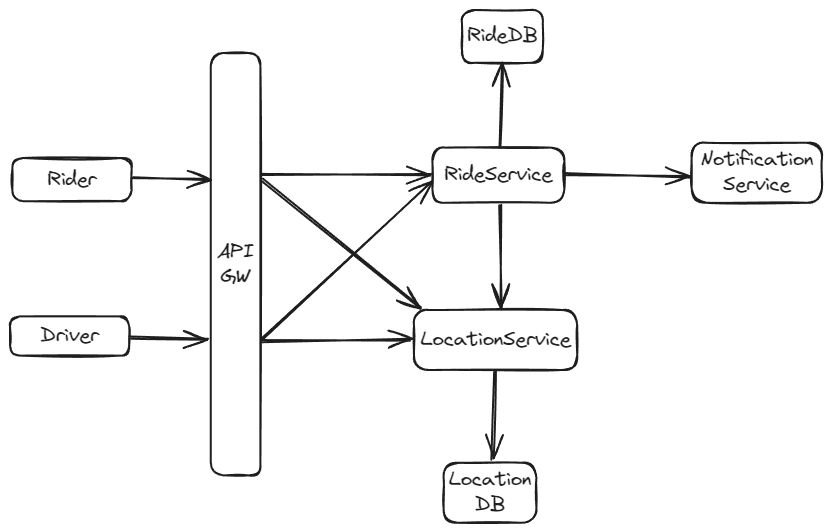

High Level Design

Design Choices

1. Driver Sharing Location

Approach 1: Simple HTTP API Calls for Sharing Location

In this approach, the driver's app sends the driver's current location to the server at regular intervals using HTTP POST requests. This is the most straightforward way to send data over the internet and does not require maintaining a connection between the driver's app and the server.
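A minimal sketch of one such request using Python's standard library. The endpoint URL and the payload fields (`driver_id`, `lat`, `lng`, `timestamp_ms`) are hypothetical; the point is that every update is a self-contained POST that any server behind the load balancer can handle:

```python
import json
import time
import urllib.request

# Hypothetical endpoint; the design does not specify the API shape.
LOCATION_ENDPOINT = "https://api.example.com/v1/drivers/location"


def build_location_request(driver_id: str, lat: float, lng: float) -> urllib.request.Request:
    """Build (but do not send) one stateless location-update POST request."""
    payload = {
        "driver_id": driver_id,
        "lat": lat,
        "lng": lng,
        "timestamp_ms": int(time.time() * 1000),
    }
    return urllib.request.Request(
        LOCATION_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# The driver app would send one such request at a regular interval, e.g.:
#   urllib.request.urlopen(build_location_request("driver-42", 37.7749, -122.4194), timeout=5)
```

Each call carries the full set of HTTP headers and, unless the connection is reused, a fresh connection setup, which is where the overhead described in the cons below comes from.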

Pros:

- Simple to Implement: This method leverages the HTTP protocol, which is well-known and widely supported, making it easy to use with many available libraries and tools.

- Scalable on the Server Side: Stateless requests mean that each request is independent. Servers don't need to maintain session state, which simplifies scaling. Load balancers can distribute incoming requests to any available server without worrying about a user's prior interactions.

Cons:

- Network Overhead: Each HTTP request includes headers and other protocol information. Because location updates occur frequently, this overhead can accumulate, using more bandwidth than necessary.

- Battery Drain: Smartphones must wake up the network interface for each location update sent, leading to increased battery usage.

- Latency: Each new HTTP connection requires a TCP handshake (and, over HTTPS, a TLS handshake). If connections are not kept alive and reused, frequent updates pay this setup cost repeatedly, which can delay location updates.

Approach 2: Persistent WebSocket Connection for Sharing Location (Preferred)

This method involves establishing a WebSocket connection between the driver's app and the server. Once this connection is established, it remains open, allowing for two-way communication with minimal overhead.

Pros:

- Low Network Overhead: After the initial handshake, each WebSocket message carries only a small frame header (2 to 14 bytes) instead of a full set of HTTP request headers, reducing the amount of data sent over the network.

- Real-Time Communication: WebSocket connections allow for data to be sent and received almost instantly, providing real-time updates which are crucial for displaying a driver's location to riders.

- Battery Efficiency: Because the connection stays open, the app does not pay connection-setup costs for every update, which keeps the device's radio active for less time than frequent HTTP requests would.

Cons:

- Implementation Complexity: Setting up and managing WebSocket connections can be more complex than simple HTTP requests. It requires handling cases where the connection drops and needs to be re-established.

- Resource Usage on the Server: Persistent connections consume more server resources because each connection requires a dedicated socket and memory to maintain its state. This can be particularly challenging when scaling to handle millions of concurrent connections.

- Concurrency Management: Managing a large number of open, concurrent WebSocket connections can be complex. It requires careful design to ensure that all connections are handled efficiently and without exhausting server resources.

Persistent WebSockets avoid repeated connection setup, which helps conserve battery life on driver devices. They also minimize network overhead after the initial handshake, making them a more bandwidth-efficient solution that is well-suited to our scale and real-time operational demands.
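Once the connection is authenticated, the server already knows which driver it belongs to, so each message can be small. A minimal sketch of the server-side handler, assuming a hypothetical JSON message schema (`type`, `lat`, `lng`, `ts_ms`) and an in-memory `latest_locations` map standing in for a shared location store; a WebSocket library would call `handle_driver_message` for each text frame received on a driver's connection:

```python
import json
import time
from dataclasses import dataclass
from typing import Dict


@dataclass
class DriverLocation:
    driver_id: str
    lat: float
    lng: float
    ts_ms: int


# Latest known position per driver; a stand-in for a shared location store.
latest_locations: Dict[str, DriverLocation] = {}


def handle_driver_message(driver_id: str, raw: str) -> None:
    """Handle one text message received on a driver's open WebSocket.

    The driver was identified when the connection was authenticated,
    so each message only needs to carry coordinates and a timestamp.
    """
    msg = json.loads(raw)
    if msg.get("type") != "location":
        return  # other message types (pings, status changes) are ignored in this sketch
    lat, lng = float(msg["lat"]), float(msg["lng"])
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lng <= 180.0):
        raise ValueError(f"invalid coordinates: {lat}, {lng}")
    ts_ms = int(msg.get("ts_ms", time.time() * 1000))
    previous = latest_locations.get(driver_id)
    # Ignore stale updates so a delayed message cannot move the driver backwards.
    if previous is None or ts_ms >= previous.ts_ms:
        latest_locations[driver_id] = DriverLocation(driver_id, lat, lng, ts_ms)
```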

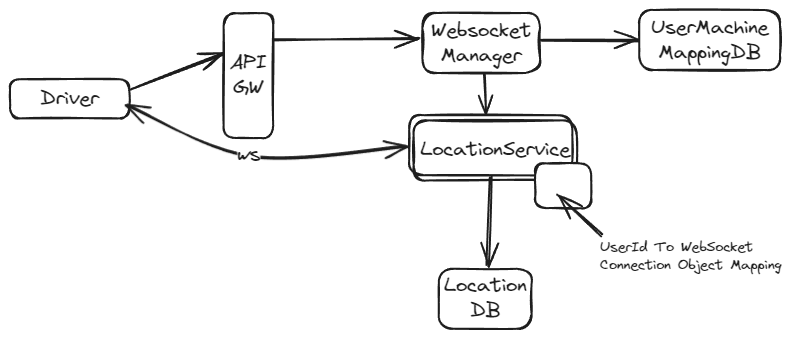

NOTE: There are two approaches to routing a user's traffic to the server instance that holds their WebSocket connection:

- Use a Load Balancer with an IP Hash Algorithm (Sticky Sessions) so that a given client is consistently routed to the server instance that maintains its WebSocket connection.

- Use an independent WebSocket Manager that maintains a mapping from each user to the server instance holding their connection.
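Both routing options can be sketched in a few lines. This is a simplified illustration under stated assumptions: the server names are hypothetical, the hash-based routing stands in for a load balancer's IP hash setting, and the `WebSocketManager` registry would in practice live in a shared store rather than in one process's memory:

```python
import hashlib
from typing import Dict, List, Optional

SERVERS = ["ws-1", "ws-2", "ws-3"]  # hypothetical WebSocket server instances


def ip_hash_route(client_ip: str, servers: List[str]) -> str:
    """Option 1 (sticky sessions): the same client IP always maps to the same server,
    as long as the server list does not change."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


class WebSocketManager:
    """Option 2: an explicit registry of which server instance holds each user's connection."""

    def __init__(self) -> None:
        self._user_to_server: Dict[str, str] = {}

    def register(self, user_id: str, server_id: str) -> None:
        # Called by a server when a user's WebSocket connection is opened.
        self._user_to_server[user_id] = server_id

    def unregister(self, user_id: str, server_id: str) -> None:
        # Remove the entry only if it still points at this server; the user may
        # already have reconnected to a different instance.
        if self._user_to_server.get(user_id) == server_id:
            del self._user_to_server[user_id]

    def server_for(self, user_id: str) -> Optional[str]:
        # Used by other services to find where to deliver a message for this user.
        return self._user_to_server.get(user_id)
```

Plain modulo hashing remaps most clients when a server is added or removed, breaking their sticky assignment; consistent hashing reduces that churn. The explicit registry avoids the problem at the cost of an extra component that must be kept up to date.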

2. Rider Getting Nearby Drivers

Approach 1: Polling to Get All Nearby Drivers

The rider's app periodically sends HTTP GET requests to the server to fetch the latest locations of drivers near their current location. This polling is done at regular intervals (e.g., every 30 seconds), regardless of whether there have been any changes in driver locations.
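On the server, such a GET request needs some definition of "nearby". A minimal sketch, assuming an in-memory map of driver positions and a hypothetical 3 km search radius, filters drivers by great-circle (haversine) distance:

```python
import math
from typing import Dict, List, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lng1: float, lat2: float, lng2: float) -> float:
    """Great-circle distance between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lng2 - lng1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby_drivers(
    rider: Tuple[float, float],
    drivers: Dict[str, Tuple[float, float]],
    radius_km: float = 3.0,  # hypothetical search radius
) -> List[str]:
    """Return IDs of drivers within radius_km of the rider, closest first (linear scan)."""
    lat, lng = rider
    scored = [
        (haversine_km(lat, lng, d_lat, d_lng), driver_id)
        for driver_id, (d_lat, d_lng) in drivers.items()
    ]
    return [driver_id for dist, driver_id in sorted(scored) if dist <= radius_km]
```

This linear scan checks every driver on every request, which does not scale to a million active drivers; a spatial index, such as the geohash cells used elsewhere in this design, would restrict the search to a few nearby cells.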

Pros:

- Simplicity: Implementing this approach is straightforward. It uses basic HTTP requests which are easy to set up and manage.

- Compatibility: Works on all platforms and doesn't require maintaining a continuous connection, which simplifies client-side logic.

Cons:

- Network and Battery Consumption: Each poll sends a complete HTTP request with headers, which consumes more data and more battery due to the radio having to power up frequently.

- Latency: Each poll costs a full request/response round trip (plus connection setup if the connection is not reused), and changes are only seen at the polling interval, so the displayed driver locations can be up to one interval out of date.

Approach 2: Persistent WebSocket Connections for Sharing Driver Locations (Preferred)

A persistent WebSocket connection is established between the rider's app and the server. This connection remains open, allowing real-time updates of drivers' locations to be pushed from the server as they change. This method provides a continuous flow of data and updates without needing to repeatedly request them.

Pros:

- Real-Time Updates: Changes in drivers' locations are sent immediately to the rider's app, providing up-to-the-minute accuracy.

- Reduced Overhead: Once the WebSocket connection is established, data can be sent with minimal additional overhead, saving bandwidth.

- Battery Efficiency: Uses less battery than polling because the device maintains one open connection, avoiding the frequent power spikes associated with establishing new HTTP connections.

Cons:

- Implementation Complexity: WebSockets are more complex to set up and require handling cases like connection drops and reconnecting, which can complicate the client and server-side code.

- Scalability and Management: Maintaining a large number of open WebSocket connections can be challenging as it requires a robust server infrastructure that can handle many simultaneous connections without degrading performance.

In the context of a ride-sharing app, where timely updates can greatly enhance user experience and operational efficiency, the WebSocket approach is generally preferred despite its higher complexity. This preference is due to its real-time capabilities and lower overall resource consumption once the initial setup challenges are addressed.

3. Pushing Real-Time Location Updates to Riders

Approach 1: Synchronous Calls for Real-Time Updates

Whenever a driver updates their location, the system immediately makes synchronous backend calls to identify all nearby riders and then pushes the location update to these riders.
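A minimal sketch of this synchronous flow, assuming an in-memory map of rider positions, a hypothetical 3 km radius, and an injected `push` function standing in for the real notification path. The driver's update does not complete until every matching rider has been pushed to, which is where the scalability and latency concerns listed below come from:

```python
import math
from typing import Callable, Dict, Tuple

EARTH_RADIUS_KM = 6371.0


def approx_distance_km(lat1: float, lng1: float, lat2: float, lng2: float) -> float:
    # Equirectangular approximation: adequate for the short distances involved here.
    x = math.radians(lng2 - lng1) * math.cos(math.radians((lat1 + lat2) / 2))
    y = math.radians(lat2 - lat1)
    return EARTH_RADIUS_KM * math.hypot(x, y)


def on_driver_location_sync(
    driver_id: str,
    lat: float,
    lng: float,
    riders: Dict[str, Tuple[float, float]],
    push: Callable[[str, dict], None],
    radius_km: float = 3.0,  # hypothetical radius
) -> int:
    """Synchronously find nearby riders and push the update to each before returning."""
    update = {"driver_id": driver_id, "lat": lat, "lng": lng}
    sent = 0
    for rider_id, (r_lat, r_lng) in riders.items():  # scans every rider on every update
        if approx_distance_km(lat, lng, r_lat, r_lng) <= radius_km:
            push(rider_id, update)                     # each push delays the driver's request
            sent += 1
    return sent
```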

Pros:

- Straightforward Implementation: This approach is linear and easier to conceptualize and implement since it follows a direct cause (location update) and effect (notification to riders) model.

- Immediate Feedback: Provides immediate updates, which is essential for applications requiring up-to-the-minute accuracy.

Cons:

- Scalability Issues: Handling everything synchronously can severely limit the system’s ability to scale, as each update requires immediate processing power and can lead to bottlenecks.

- Increased Latency: The time taken to process the update, determine which riders are affected, and then push out notifications can introduce significant delays.

- Resource Intensity: This method places a high demand on backend resources, potentially affecting overall system performance, especially during peak times.

Approach 2: Kafka for Asynchronous Processing

Driver location updates are published to a Kafka topic. A fleet of worker services (a Kafka consumer group) then processes these messages asynchronously, identifies nearby riders, and sends them the updates.
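The decoupling that Kafka provides can be illustrated in-process. The sketch below is not Kafka: it uses Python's `queue.Queue` as a stand-in for a topic and threads as stand-ins for a consumer group, to show that the ingestion path only enqueues while workers do the slower fan-out. With real Kafka, updates would typically be keyed (for example by driver ID) so that all updates for one key land in the same partition and are consumed in order, and messages would be retained on the brokers rather than held in memory:

```python
import queue
import threading
from typing import Callable, List

# Stand-in for a Kafka topic: a bounded in-memory buffer between ingestion and processing.
location_topic = queue.Queue(maxsize=10_000)


def ingest_location(update: dict) -> None:
    """Ingestion path: buffer the update and return (blocks only if the buffer is full)."""
    location_topic.put(update)


def worker(process: Callable[[dict], None]) -> None:
    """Stand-in for one consumer: take updates off the buffer and fan them out."""
    while True:
        update = location_topic.get()
        try:
            if update is None:  # sentinel used to stop this worker
                return
            process(update)     # e.g. find nearby riders and push the update to them
        finally:
            location_topic.task_done()


def start_workers(n: int, process: Callable[[dict], None]) -> List[threading.Thread]:
    threads = [threading.Thread(target=worker, args=(process,), daemon=True) for _ in range(n)]
    for t in threads:
        t.start()
    return threads


def stop_workers(threads: List[threading.Thread]) -> None:
    for _ in threads:
        location_topic.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
```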

Pros:

- Enhanced Scalability: By decoupling data ingestion from data processing, Kafka allows the system to handle higher volumes of data without degradation of performance.

- Fault Tolerance: Kafka’s durable storage and replication mean that, with a suitable replication factor and acknowledgment settings, location updates survive the failure of an individual broker.

- Load Management: Acts as a buffer to manage data flow during peak traffic times, preventing system overload.

Cons:

- System Complexity: Introduces complexity in the system’s architecture, requiring specialized knowledge for setup, monitoring, and maintenance.

- Potential Latency: While Kafka is efficient, the asynchronous nature of the processing might introduce a small delay in updating the riders, dependent on the queue length and processing time.

- Operational Demands: Requires ongoing management of Kafka clusters, including topics, partitions, and balancing across consumers to maintain optimal performance.

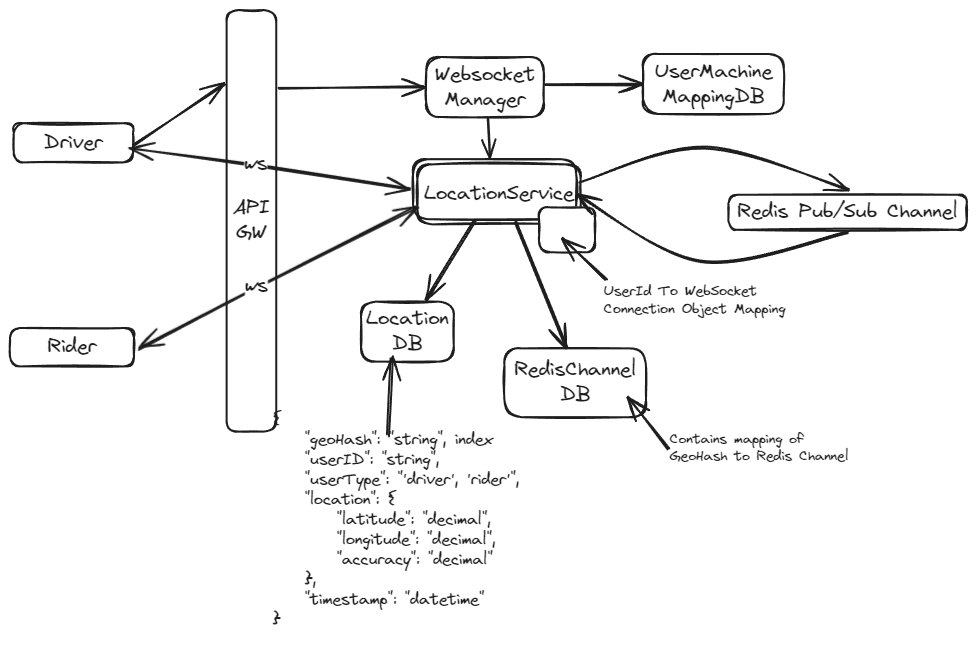

Approach 3: Redis Pub/Sub with Geohash Channels (Preferred)

Each driver’s location update is published to a Redis Pub/Sub channel that corresponds to a geohash. Riders subscribe to the geohash channels relevant to their current location to receive updates.
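Mapping a location to its channel only requires a geohash encoder. A minimal sketch of the standard geohash algorithm plus a publisher, assuming a hypothetical channel naming scheme (`drivers:<geohash>`), a precision of 5 characters (cells of roughly 4.9 km × 4.9 km), and an injected `publish(channel, message)` callable, for which a redis-py client's `publish` method would fit:

```python
import json
from typing import Callable

BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # geohash alphabet


def geohash_encode(lat: float, lng: float, precision: int = 6) -> str:
    """Standard geohash: interleave longitude/latitude bisection bits, 5 bits per character."""
    lat_lo, lat_hi = -90.0, 90.0
    lng_lo, lng_hi = -180.0, 180.0
    chars, bits, bit_count, even = [], 0, 0, True  # even-numbered bits encode longitude
    while len(chars) < precision:
        if even:
            mid = (lng_lo + lng_hi) / 2
            if lng >= mid:
                bits, lng_lo = (bits << 1) | 1, mid
            else:
                bits, lng_hi = bits << 1, mid
        else:
            mid = (lat_lo + lat_hi) / 2
            if lat >= mid:
                bits, lat_lo = (bits << 1) | 1, mid
            else:
                bits, lat_hi = bits << 1, mid
        even = not even
        bit_count += 1
        if bit_count == 5:  # every 5 bits become one base32 character
            chars.append(BASE32[bits])
            bits, bit_count = 0, 0
    return "".join(chars)


def channel_for(lat: float, lng: float, precision: int = 5) -> str:
    # Hypothetical naming scheme: one Pub/Sub channel per geohash cell.
    return f"drivers:{geohash_encode(lat, lng, precision)}"


def publish_driver_location(
    publish: Callable[[str, str], object], driver_id: str, lat: float, lng: float, precision: int = 5
) -> str:
    """Publish the update on the channel for the driver's current cell and return that channel."""
    channel = channel_for(lat, lng, precision)
    publish(channel, json.dumps({"driver_id": driver_id, "lat": lat, "lng": lng}))
    return channel
```

On the rider side, a client would subscribe to its own cell and usually the eight neighboring cells, since a driver just across a cell boundary can be closer than one inside the same cell; with redis-py that means creating a `pubsub()` object and calling `subscribe(...)` with those channel names. When the rider moves into a new cell, the app unsubscribes from channels it no longer needs.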

Pros:

- Low Latency: Redis is optimized for high throughput and low latency, making it ideal for real-time applications.

- Scalable Message Distribution: By using geohash-based channels, messages are only sent to relevant subscribers, which efficiently scales as user numbers increase.

- Simple Subscription Logic: Allows for dynamic subscription management where riders can enter or exit geohash channels based on their movements, simplifying overall message handling.

Cons:

- Granularity Issues: The effectiveness of this method can depend heavily on the chosen granularity of geohashes. Too broad might result in irrelevant updates; too narrow could complicate management.

- Lack of Persistence: Redis Pub/Sub is fire-and-forget and does not store messages. If a subscriber is disconnected when an update is published, it misses that update.

- Resource Use: High subscription numbers and frequent message publications can strain Redis resources, necessitating careful tuning and possibly limiting other use cases.

Choosing the right approach depends on your specific requirements regarding real-time responsiveness, system scalability, and operational complexity. For most real-time applications that need scalable and efficient message distribution, Approach 3 (Redis Pub/Sub) could be highly effective. However, if message persistence and fault tolerance are critical, Approach 2 (Kafka) offers significant advantages. Approach 1 might be suitable for smaller or less complex environments where immediate processing is prioritized over scalability.

4. Booking Rides