System Design - Design a Real-Time Chat System

Functional Requirements

- The system should support both one-on-one and group chats.

- Users must be able to send and receive messages with near-real-time delivery.

- Users must have the ability to create groups.

- Users should be permitted to join and leave groups as they wish.

- Users should have access to their complete chat history for the entire lifetime of the chat.

- The chat functionality will be limited to text messages only.

- The system should send notifications to users who are offline.

Non-Functional Requirements

- Availability: The system should enable users to send and receive messages without interruptions. Once a connection is established, users must maintain a stable connection with the chat service for real-time messaging capabilities.

- Scalability: The system must be scalable to support a large number of users and a high volume of concurrent connections.

- Latency: The system should deliver messages in near real-time, necessitating low latency.

- Consistency: The system should ensure eventual consistency for message delivery.

Capacity Requirements

- Assume there are around 1 billion users, of which 100 million are daily active users.

- Assume that the average message size is 100 bytes.

- Assume there are 10 messages per user per day.

Assuming that the user metadata is 500 bytes per user: 500 bytes/user * 1,000,000,000 users = 500,000,000,000 bytes, which equals 500 GB of storage.

With 10 messages per user per day, and each message requiring 100 bytes of storage: 100 bytes/message * 10 messages/day * 100,000,000 daily active users = 100,000,000,000 bytes/day, which is equivalent to 100 GB of storage per day.

Over the span of 5 years, accounting for 365 days per year, the storage calculation would be: 100 GB/day * 365 days/year * 5 years = 182,500 GB, or roughly 180 TB. Rounding up for headroom gives about 200 TB of storage needed for 5 years.
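The estimates above can be reproduced with a few lines of arithmetic. This is only a sketch of the calculation: the constant names are illustrative, and decimal units (1 GB = 10^9 bytes) are assumed, as in the text. The five-year figure comes out at 182.5 TB before any rounding.

```python
# Back-of-the-envelope storage estimate using the assumptions listed above.
TOTAL_USERS = 1_000_000_000
DAILY_ACTIVE_USERS = 100_000_000
USER_METADATA_BYTES = 500
MESSAGE_BYTES = 100
MESSAGES_PER_USER_PER_DAY = 10
RETENTION_YEARS = 5

GB = 10**9   # decimal gigabyte, matching the figures in the text
TB = 10**12  # decimal terabyte


def storage_estimate():
    """Return the three storage figures derived in this section."""
    metadata_bytes = TOTAL_USERS * USER_METADATA_BYTES
    daily_message_bytes = (
        DAILY_ACTIVE_USERS * MESSAGES_PER_USER_PER_DAY * MESSAGE_BYTES
    )
    five_year_bytes = daily_message_bytes * 365 * RETENTION_YEARS
    return {
        "metadata_gb": metadata_bytes / GB,
        "daily_messages_gb": daily_message_bytes / GB,
        "five_year_tb": five_year_bytes / TB,
    }


if __name__ == "__main__":
    for name, value in storage_estimate().items():
        print(f"{name}: {value:,.1f}")
```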

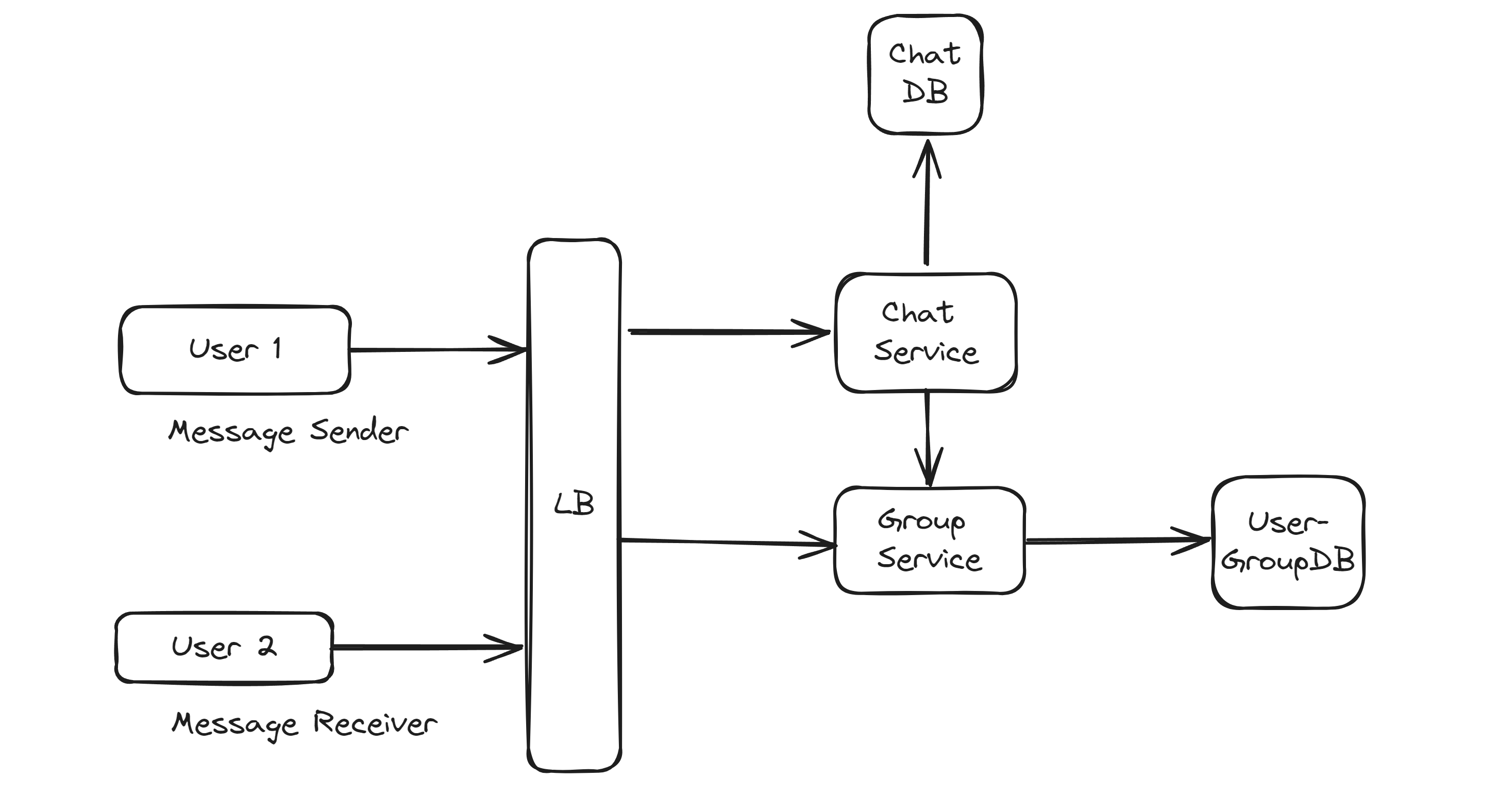

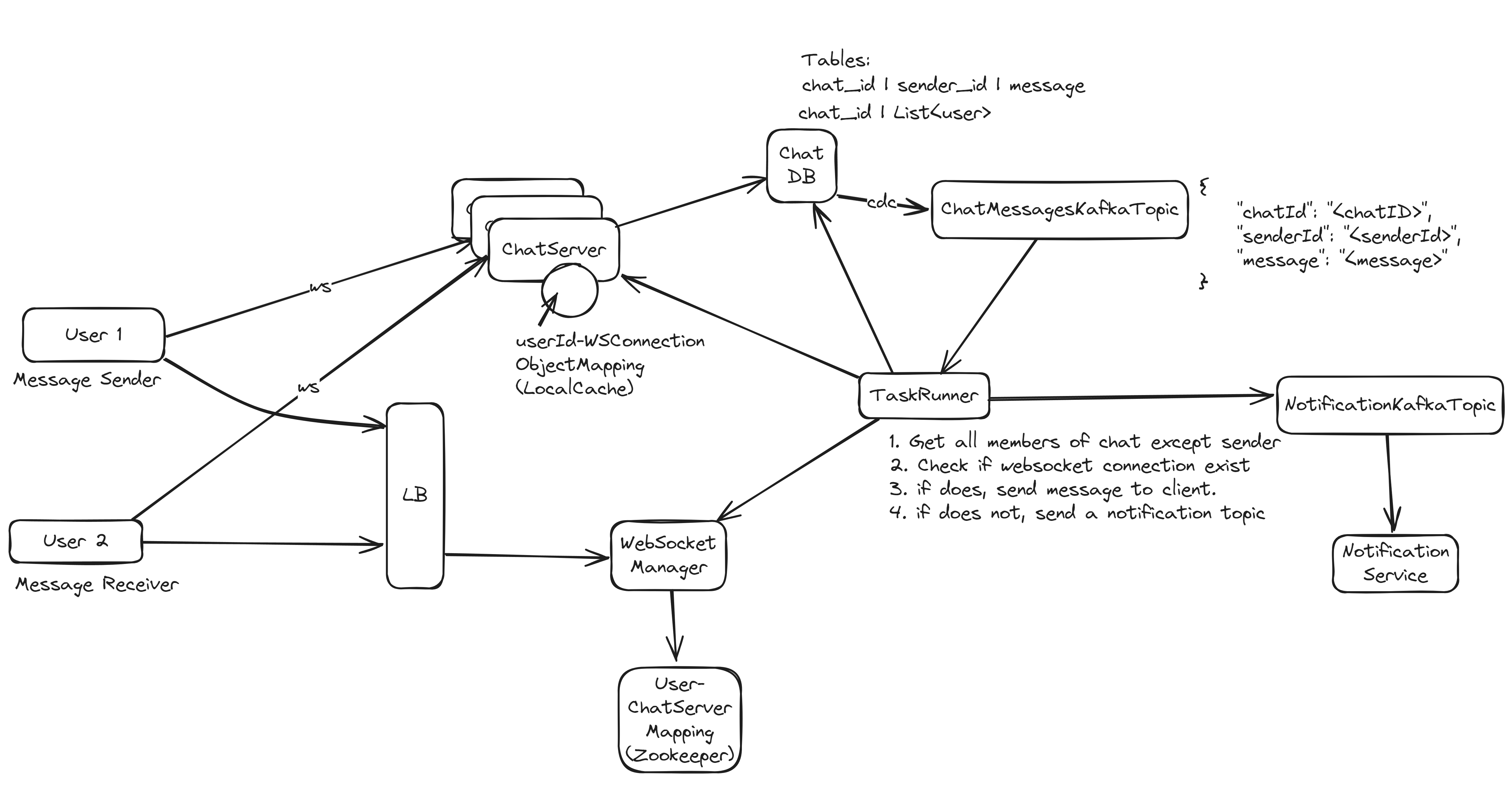

High Level Design

Design Choices

1. Receiving Real-Time Messages

Approach 1: Short Polling

In short polling, the client frequently sends HTTP GET requests to the server to check for new messages. This is a simple technique where the client "polls" the server at regular intervals to fetch updates.

Implementation involves setting a timer on the client side that triggers HTTP requests periodically. The server checks for new data at each poll request and returns any available messages to the client. The polling frequency can be configured but needs to balance between responsiveness and server load.
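A minimal sketch of the polling loop described above, assuming an in-memory stand-in for the server so the example runs without a network. `InMemoryChatServer`, `fetch_since`, and `short_poll` are hypothetical names; a real client would issue an HTTP GET in place of `fetch_since`.

```python
import time


class InMemoryChatServer:
    """Stand-in for the HTTP endpoint; a real client would send GET requests."""

    def __init__(self):
        self._messages = []

    def post(self, text):
        self._messages.append(text)

    def fetch_since(self, cursor):
        """Return (new_messages, new_cursor); the list is empty if nothing is new."""
        return self._messages[cursor:], len(self._messages)


def short_poll(server, polls, interval_s, on_poll=None):
    """Poll `polls` times, sleeping `interval_s` seconds between requests."""
    cursor = 0
    received = []
    empty_responses = 0
    for i in range(polls):
        if on_poll:
            on_poll(i)  # hook used here to simulate messages arriving mid-run
        new, cursor = server.fetch_since(cursor)
        if new:
            received.extend(new)
        else:
            empty_responses += 1  # a request that carried no useful data
        time.sleep(interval_s)
    return received, empty_responses
```

Counting the empty responses makes the trade-off visible: a shorter `interval_s` lowers delivery delay but increases the number of requests that return nothing.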

Pros:

- Short polling is straightforward and does not require complex backend infrastructure or specialized protocols.

- It's universally supported since it relies on basic HTTP and does not need any special features from the web browser.

Cons:

- Polling intervals introduce delays because updates are only checked at those specific times, not continuously.

- High frequency of polling can lead to a large number of redundant requests, putting strain on server resources and network bandwidth.

Approach 2: Long Polling

Long polling is an enhanced version of short polling. The client sends a request to the server, which holds the request open until new data is ready to be sent.

Upon receiving a client request, the server does not immediately respond with an empty resource if no data is available. Instead, the server waits and holds the request open until new messages arrive or a timeout threshold is reached. When new data is available, the server sends a response back to the client, which then immediately issues another request, and the process repeats.
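The hold-until-data-or-timeout behaviour can be sketched with a blocking queue. This is an in-process illustration under stated assumptions, not an HTTP server: `LongPollMailbox` and `long_poll_client` are hypothetical names, and the blocking `wait_for_message` call stands in for a request that the server keeps open.

```python
import queue
import threading


class LongPollMailbox:
    """Server-side holding point for one client's pending messages."""

    def __init__(self):
        self._pending = queue.Queue()

    def publish(self, text):
        self._pending.put(text)

    def wait_for_message(self, timeout_s):
        """Block until a message arrives; return None if the timeout expires."""
        try:
            return self._pending.get(timeout=timeout_s)
        except queue.Empty:
            return None


def long_poll_client(mailbox, expected, timeout_s=1.0, max_requests=100):
    """Re-issue the held request until `expected` messages have arrived."""
    received = []
    timeouts = 0
    for _ in range(max_requests):
        if len(received) >= expected:
            break
        message = mailbox.wait_for_message(timeout_s)
        if message is None:
            timeouts += 1  # the server timed out; the client simply asks again
        else:
            received.append(message)
    return received, timeouts


if __name__ == "__main__":
    box = LongPollMailbox()
    # Deliver a message shortly after the client starts waiting.
    threading.Timer(0.05, box.publish, args=("hi",)).start()
    print(long_poll_client(box, expected=1, timeout_s=2.0))
```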

Pros:

- Long polling reduces the latency in message delivery compared to short polling, as the server sends updates as soon as they are available. It also avoids the repeated wake-ups required for frequent HTTP requests.

Cons:

- It can be resource-intensive because the server must manage many persistent connections, especially with a high number of clients.

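- Despite being more real-time than short polling, long polling can still introduce slight delays in message delivery, because the client must issue a new request after every response before the next message can be delivered.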

Approach 3: Server-Sent Events (SSE)

Server-Sent Events enable the server to push data to the client over a single long-lived HTTP connection.

The client initiates an SSE connection by making a standard HTTP request to the server, which then keeps the connection open. SSE allows the server to continuously send data to the client in a one-way stream, with the client acting as a subscriber to the server's updates. This connection can stay open indefinitely, allowing the server to send real-time updates as they occur.
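On the wire, an SSE stream is plain text served as `text/event-stream`: each event is a group of `field: value` lines terminated by a blank line. The sketch below formats and parses such events; `format_sse` and `parse_sse` are hypothetical helper names, and the parser handles only the `id`, `event`, and `data` fields rather than the full specification.

```python
def format_sse(data, event=None, event_id=None):
    """Serialize one event in the text/event-stream wire format."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # Multi-line payloads are sent as one "data:" line per payload line.
    for chunk in data.split("\n"):
        lines.append(f"data: {chunk}")
    return "\n".join(lines) + "\n\n"  # a blank line terminates the event


def parse_sse(stream):
    """Parse a complete stream into a list of event dictionaries."""
    events = []
    for block in stream.split("\n\n"):
        if not block.strip():
            continue
        fields = {"data": []}
        for line in block.split("\n"):
            name, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # one leading space after the colon is dropped
            if name == "data":
                fields["data"].append(value)
            elif name in ("event", "id"):
                fields[name] = value
        events.append({
            "id": fields.get("id"),
            "event": fields.get("event", "message"),  # default event type
            "data": "\n".join(fields["data"]),
        })
    return events
```

The `id` field is what lets a reconnecting client tell the server where it left off, which is how the protocol supports resuming a stream after a dropped connection.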

Pros:

- SSE is straightforward to set up on the server side and generally easier to work with than WebSockets because it leverages standard HTTP.

- The protocol has built-in features for automatically retrying connections, making it robust against temporary network failures.

Cons:

- Because SSE is inherently unidirectional, it is not suitable for scenarios where the client needs to send data to the server frequently.

- Browser support is not as universal as for traditional polling methods: current major browsers support SSE natively, but older ones such as Internet Explorer do not, so a polyfill or fallback may be needed.

Approach 4: WebSocket

WebSocket provides a full-duplex communication channel that allows for a continuous connection between the client and server.

The client establishes a WebSocket connection by sending a special HTTP request that includes an 'Upgrade' header to request a protocol switch from HTTP to WebSocket. Once the server accepts this upgrade request, the protocol switch is made, and the WebSocket connection remains open for two-way communication until explicitly closed by either the client or server.
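The server side of this upgrade can be sketched as follows. Per RFC 6455, the server proves it understood the request by hashing the client's `Sec-WebSocket-Key` together with a fixed GUID and returning the result in `Sec-WebSocket-Accept`. The function names are illustrative, and the sketch covers only the handshake, not frame parsing or the connection lifecycle.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(client_key):
    """Derive the Sec-WebSocket-Accept value from the client's key."""
    digest = hashlib.sha1((client_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def handshake_response(request_headers):
    """Return the 101 response text, or None if this is not an upgrade request."""
    headers = {name.lower(): value for name, value in request_headers.items()}
    if headers.get("upgrade", "").lower() != "websocket":
        return None
    connection_tokens = [
        token.strip().lower() for token in headers.get("connection", "").split(",")
    ]
    if "upgrade" not in connection_tokens:
        return None
    key = headers.get("sec-websocket-key")
    if not key:
        return None
    return "\r\n".join([
        "HTTP/1.1 101 Switching Protocols",
        "Upgrade: websocket",
        "Connection: Upgrade",
        f"Sec-WebSocket-Accept: {accept_key(key)}",
        "",
        "",
    ])
```

In practice this step is handled by a WebSocket library or the web server itself; the sketch only shows what the protocol switch consists of.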

Pros:

- WebSockets support full-duplex messaging, enabling both the client and server to send messages independently and simultaneously.

- After the initial handshake, the overhead of additional HTTP headers is eliminated, making WebSocket communication much more efficient than traditional HTTP requests.

Cons:

- The WebSocket protocol is more complex to implement, with additional considerations for connection lifecycle management, error handling, and security.

- Server infrastructure must be capable of handling persistent connections and may require specialized proxying and load-balancing solutions that understand WebSocket traffic.

Based on the above solutions, there are two ways we can set up a real-time chat system.

Approach 1: Stateless API for Sending + Server-Sent Events (SSE) for Receiving

In this hybrid approach, clients use a standard HTTP-based stateless API for sending messages and Server-Sent Events (SSE) for receiving updates from the server.

Pros:

- Scalability of Sending: Utilizing a stateless API for sending messages allows the system to handle large volumes of outgoing messages with ease. This is because stateless interactions do not require the server to maintain any client context, making it easier to distribute the load across multiple servers.

- Battery Efficiency: SSE can be lighter on battery usage on mobile devices because the receive channel is one-way. The server streams data to the client, and the client does not need to send periodic application-level heartbeats or acknowledgments over that connection.

Cons:

- Unidirectional Limitation: With SSE, the server cannot receive data from the client over the same connection. Thus, clients need to make a separate HTTP request to send messages, which introduces additional complexity and slight delays due to the need for handling multiple connections.

- Multiple Connections: Using SSE for receiving messages and a separate stateless API for sending them necessitates maintaining at least two connections (one for SSE and one for the API) for each client. This can increase the client’s resource usage, complicating client-side connection management.

- Browser Support: SSE lacks native support in some older browsers, such as Internet Explorer, which might necessitate fallback solutions or polyfills to ensure functionality across all user devices.

Approach 2: WebSocket for Bidirectional Communication (Preferred)

WebSockets provide a full-duplex communication channel, allowing clients to send and receive messages over a single persistent connection.

Pros:

- Real-Time Interaction: WebSocket connections enable real-time interactions with significantly lower latency compared to the combination of SSE and stateless API. This is crucial for features like typing indicators or real-time collaboration.

- Reduced Overhead: Once the WebSocket connection is established, the protocol's overhead for sending and receiving messages is minimal, making data transfer more efficient than traditional HTTP requests.

- Single Persistent Connection: Only one connection is required for both sending and receiving messages. This simplifies client-side connection management and reduces the resource overhead associated with maintaining multiple connections.

Cons:

- Complexity in Management: The implementation of WebSocket connections can be complex due to the need to handle various scenarios like connection drops, retries, and maintaining the order of messages.

- Battery Usage: Persistent WebSocket connections, which keep the device's networking hardware active, may lead to increased battery usage, especially on mobile devices. This is more pronounced if the application frequently sends or receives data.

- Infrastructure Considerations: Establishing and maintaining WebSocket connections can put a strain on the server infrastructure. It requires careful consideration regarding connection limits, memory usage, and network throughput to ensure that the WebSocket server can scale effectively.

Approach 1 might be preferred for applications where battery life is a priority and server-to-client updates are more frequent than client-to-server messages. Approach 2 is typically favored for highly interactive applications that benefit from the lowest possible latency and where the complexity of handling WebSockets can be justified by the user experience gains.

3. Scaling WebSocket Servers

Approach 1: Load Balancer with IP-Based Hashing (Sticky Sessions)

This approach employs a load balancer to distribute incoming WebSocket connections to multiple servers. IP-based hashing is used to maintain session persistence, commonly known as sticky sessions.

Pros:

- Ease of Configuration: This approach is typically easy to set up because many load balancers support sticky sessions natively.

- Consistent User Experience: Users maintain a continuous connection with the same server for the duration of their session, which can simplify session management and provide a consistent user experience.

- Horizontal Scaling: The ability to add more WebSocket servers behind the load balancer to handle increased traffic is a straightforward process, facilitating horizontal scaling.

Cons:

- Dependency on IP Address: If a user's IP address changes during a session, such as when moving between networks, they may lose their session as the load balancer routes them to a different server.

- NAT Issues: In environments where multiple users appear to have the same IP address due to Network Address Translation (NAT), the load balancer might route all those users to the same server, leading to imbalanced server utilization.

- Session Affinity: This method relies on the assumption that all requests from a user will come from the same IP address, which might not hold true in certain mobile or proxy scenarios.
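IP-based hashing can be sketched in a few lines. This is an illustration of the idea rather than how any particular load balancer implements it; `pick_server` and the server names are hypothetical, and the IP addresses come from documentation-reserved ranges.

```python
import hashlib


def pick_server(client_ip, servers):
    """Map a client IP to one server by hashing the address."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


if __name__ == "__main__":
    pool = ["ws-1", "ws-2", "ws-3"]
    # The same IP always lands on the same server (sticky session).
    print(pick_server("203.0.113.7", pool))
    # Every client behind one NAT address shares that single server.
    print({pick_server("198.51.100.1", pool) for _ in range(1000)})
```

The sketch makes both drawbacks concrete: a client whose IP changes may hash to a different server, and all users behind one NAT address hash to the same one. Note also that with a simple modulo, changing the number of servers remaps most clients.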

Approach 2: ZooKeeper for Client-Server Machine Mapping

In the second approach, Apache ZooKeeper is used to store and manage the mapping of WebSocket clients to their respective server machines.
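The kind of mapping kept in the coordination service can be sketched with an in-memory registry. This is a stand-in under stated assumptions: `ConnectionRegistry` and its methods are hypothetical and do not use the ZooKeeper API. In a real deployment the entries might live in ephemeral znodes, which ZooKeeper removes automatically when the owning server's session ends.

```python
class ConnectionRegistry:
    """In-memory stand-in for the client-to-server mapping."""

    def __init__(self):
        self._server_of = {}  # user_id -> server_id
        self._users_on = {}   # server_id -> set of user_ids

    def register(self, user_id, server_id):
        """Record that a user's WebSocket is held by the given server."""
        self.unregister(user_id)  # a user is connected to one server at a time
        self._server_of[user_id] = server_id
        self._users_on.setdefault(server_id, set()).add(user_id)

    def unregister(self, user_id):
        server_id = self._server_of.pop(user_id, None)
        if server_id is not None:
            self._users_on[server_id].discard(user_id)

    def lookup(self, user_id):
        """Return the server holding the user's connection, or None if offline."""
        return self._server_of.get(user_id)

    def server_failed(self, server_id):
        """Drop a dead server's mappings; return the users who must reconnect."""
        orphaned = self._users_on.pop(server_id, set())
        for user_id in orphaned:
            del self._server_of[user_id]
        return sorted(orphaned)
```

To deliver a message, the sending server would call `lookup` for the recipient and forward the message to the server returned; a `None` result means the recipient is offline and should receive a notification instead.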

Pros:

- Dynamic Configuration: ZooKeeper enables dynamic management of server-client mappings, which is particularly useful in environments where servers are frequently added or removed.

- Enhanced Failover: In the event of server failure, ZooKeeper can facilitate the reassignment of clients to operational servers, thus supporting seamless failover and service continuity.

- System Resilience: The use of ZooKeeper, a distributed coordination system, enhances the overall resilience and fault tolerance of the WebSocket infrastructure.

Cons:

- Operational Complexity: Setting up and maintaining a ZooKeeper cluster is more complex than a simple load balancer setup. It requires specialized knowledge to manage the ZooKeeper ensemble effectively.

- Coordination Overhead: Frequent updates to the client-server mappings in ZooKeeper and the need to watch for these changes introduce additional overhead that can affect system performance.

- Scalability of the Coordination System: As the central component for managing mappings, the ZooKeeper cluster itself must be scaled and monitored appropriately to handle the load, introducing another layer that must be managed and scaled.

Load Balancer with IP-Based Hashing is a good fit for systems where user sessions are relatively stable, IP addresses do not change frequently, and the architecture favors simplicity over the flexibility of server assignments. ZooKeeper for Client-Server Mapping is preferable in dynamic environments where server instances frequently change due to scaling operations or in systems that require sophisticated failover capabilities. It is well-suited for complex, distributed systems where maintaining a consistent user-to-server mapping is critical for the application's functionality.