System Design - Design Rate Limiter

Functional Requirements

- The system should limit the number of requests within a given time frame.

- The system should return an HTTP 429 status code for requests that breach the threshold.

- In the event of a failure, it should either fail open (allow all traffic) or fail closed (block all traffic), based on predefined policies.

Non-Functional Requirements

- Latency: Low latency is crucial for a rate limiter as it is involved in processing every request made to the system. High latency can significantly slow down the overall response time of the system.

- Availability: A rate limiter is a critical component that can impact the availability of the entire system it protects. If the rate limiter goes down or malfunctions, it could inadvertently block all incoming traffic or allow excessive traffic, leading to system failure.

- Scalability: The rate limiter must scale efficiently to manage an increased number of requests without performance degradation as the system grows.

- Robustness: The rate limiter must be resilient to various types of failures, such as network issues, server crashes, or unexpected traffic spikes.

Capacity Requirements

- Assume there are approximately 1 million users.

- Assume we need to store the userId, timestamp, and counter attributes for each rate-limiting record. This implies a requirement of 16 bytes of storage per record (8 bytes for userId + 4 bytes for timestamp + 4 bytes for counter), resulting in 16MB of storage for 1 million users.

Alternatively, if we need to record every request, assuming a user makes 100 requests per day, it would require approximately 1.2 GB of daily storage.

High Level Design

Approach 1: Rate Limiter on the Server Hosting API Endpoint

In this approach, the rate limiting logic is integrated directly into the server that hosts the API. The API server itself is responsible for tracking and limiting the rate of requests.

Consequences

Pros

- No need for additional infrastructure layers.

- Lower latency, as there is no additional hop for the requests.

- No extra infrastructure costs.

Cons

- Potential to become a bottleneck as scaling the rate limiting logic requires scaling the entire API server.

- Consumes the API server's resources (CPU, memory), which may affect the API's performance.

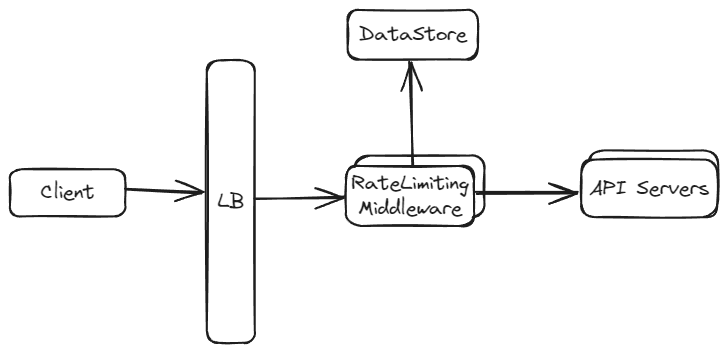

Approach 2: Rate Limiter as a Middleware

In this approach, rate limiting is implemented as a separate middleware layer, which could be a standalone service or a proxy through which all requests pass before reaching the API server.

Consequences

Pros

- Easier to scale independently of the API servers.

- Simplified management and monitoring of rate limiting policies across multiple services.

- Protects the API server from being overwhelmed by excessive requests and potential rate limiter failures.

Cons

- Adds an extra layer to the infrastructure, requiring more components to manage and monitor.

- Introduces an additional network hop, which could increase overall latency.

- May incur additional costs for running and maintaining separate infrastructure.

Design Dive Deep

Rate Limiting Algorithms

1. Fixed Window Rate Limiting

In Fixed Window Rate Limiting, a fixed interval (e.g., an hour, a day) is set as the time window. The request count is reset at the start of each new window. For example, with a limit of 100 requests per hour, the count resets once an hour has passed, regardless of when the first request was made in the previous window.

For this algorithm, we store the user record in the following format:

Key = userId:window (eg., ujjwalbhardwaj:12)

Value = count (number of requests)When a new request is received:

- Check if a key for the userId and window exists in the data store.

- If the key does not exist:

- Add a new record.

- If the key exists:

- Check if the counter breaches the threshold.

- If it does not breach the threshold, update the counter.

- If it breaches the threshold, return an HTTP 429 response.

- Check if the counter breaches the threshold.

Note: We can determine the window of a specific request by rounding down to the nearest window start time. For example, if a request arrives at 12:58:00, round it down to 12 and then check the key userId:12 in the datastore.

Pros

- Efficient in terms of memory and computational requirements, involving simple counter operations.

- Straightforward reset policy, easy for users to understand when their limit will be renewed.

Cons

- Can lead to uneven traffic patterns, as users might exhaust their limit early in the window and then send a burst of traffic when the window resets.

- For instance, a user making requests at 12:58:00 and 12:59:00 will still be able to make requests at 13:00:00 and 13:00:01.

2. Rolling Window Rate Limiting

Rolling Window Rate Limiting tracks the count of requests in a continuously moving window. For instance, if the window size is one hour and a request is made at 10:30, it counts all requests made since 9:30. The window rolls with each request, always looking back from the current time.

For this algorithm, we store the user record in the following format:

Key: userId

Value: [12:03:00] Sorted Set of timestamps When a new request is received:

- Check if a key for the userId exists in the data store.

- If the key does not exist:

- Add a new record.

- If the key exists:

- Remove all values from the set such that value < (currentTime - window).

- Check the size of the list. If it is greater than the threshold, return an HTTP 429.

- Otherwise, store the new timestamp.

Datastore

For the above two algorithms, we can use Redis to implement the datastore.

Redis Implementation for Fixed Window Rate Limiting

To implement the Fixed Window Rate Limiting Algorithm, Redis offers the following commands:

- INCR: Used to increment the counter for each request.

- EXPIRE: Sets an expiration time for the

userId:windowkey (e.g., an expiry of 1 hour).

Notable Features:

- Atomicity: Redis ensures atomicity with operations like

INCR. This means the count is reliably incremented for each request, even when multiple requests are being processed simultaneously. - Performance: Redis is highly performant and can handle a large number of operations per second, which is ideal for rate limiting scenarios.

- Expiration Handling: Redis automatically handles the expiration of keys. Once a key expires (i.e., the time window ends), it is removed. The next request will create a new key for the new window.

Redis Implementation for Rolling Window Rate Limiting

To implement the Rolling Window Rate Limiting Algorithm, Redis offers the Sorted Set data structure. We can use Sorted Set such that

- Key: userId

- Value: Sorted Set of Timestamps

Implementation Steps

- For a user's request at timestamp

1234567890, execute the following commands:ZADD user_requests 1234567890 1234567890: Adds the current timestamp to the sorted set.ZREMRANGEBYSCORE user_requests -inf (1234567890-3600): Removes requests older than 1 hour.ZCOUNT user_requests (1234567890-3600) 1234567890: Counts the number of requests in the last hour.- If the result of

ZCOUNTis greater than the predefined limit (e.g., 100), deny the request.